When Intelligence Meets Accountability

The pharmaceutical industry stands at a crossroads.

AI promises to accelerate discovery, optimize manufacturing, and sharpen commercial strategies.

But there’s a single word standing between ambition and adoption: trust.

Pharma’s need for speed is colliding head-on with the demand for compliance, transparency, and patient safety. The result? A landscape where innovation is possible, but only if it’s accountable.

Here’s the truth:

- An AI model that can’t be explained can’t be approved. Regulators want traceability, not guesswork.

- An AI model that can’t be audited can’t be scaled. Quality teams need evidence, not anecdotes.

- An AI model that can’t be defended can’t be deployed. Commercial and clinical leaders need confidence, not black-box mystery.

AI’s potential in pharma is enormous — but without governance, controls, and human-aligned oversight, potential becomes exposure.

“AI without trust isn’t intelligence — it’s noise.”

— Perceptive Analytics Leadership Team


The Core Challenge: Speed vs. Intelligibility

Pharma leaders are under immense pressure — get drugs to market faster, run leaner, and respond in real time.

Yet regulators are tightening the screws.

AI is no longer a sandbox experiment. It’s under the spotlight.

Regulators are setting the new playbook:

- EU AI Act: Classifies AI in high-risk uses, including AI built into medical devices, as “high risk,” requiring human oversight, documentation, and continuous monitoring, with obligations phasing in from 2025.

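- FDA guidance on AI-enabled device software: Recommends a Predetermined Change Control Plan (PCCP) that specifies in advance how a model may be modified and how each modification will be validated, so that models can evolve safely.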

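- EudraLex Volume 4, Annex 11 and draft Annex 22: Annex 11 requires validated computerised systems in GMP, and the draft Annex 22 (published for consultation in 2025) sets expectations for AI models used in critical GMP applications, including defined intended use, test data integrity, and explainability.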
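To make the PCCP idea more concrete, here is a minimal Python sketch of gating a model update against a pre-agreed plan. It assumes a plan can be reduced to a set of permitted change types plus minimum acceptance metrics. The FDA guidance describes what a PCCP should contain (planned modifications, a modification protocol, and an impact assessment) but prescribes no code or data format, so `ChangeControlPlan`, `ProposedUpdate`, `within_plan`, and the metric names are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical representation of a PCCP. The FDA guidance describes the plan's
# contents but prescribes no data format; these field names are illustrative.
@dataclass(frozen=True)
class ChangeControlPlan:
    allowed_changes: frozenset          # modification types pre-specified in the plan
    min_metrics: dict = field(default_factory=dict)  # metric name -> minimum acceptable value


@dataclass(frozen=True)
class ProposedUpdate:
    change_type: str
    metrics: dict                       # metric name -> value on a locked test set


def within_plan(plan, update):
    """Return (ok, reasons). An update stays inside the plan only if its change
    type was pre-specified and every acceptance metric meets its minimum."""
    reasons = []
    if update.change_type not in plan.allowed_changes:
        reasons.append(f"change type '{update.change_type}' not pre-specified")
    for name, minimum in plan.min_metrics.items():
        value = update.metrics.get(name)
        if value is None:
            reasons.append(f"metric '{name}' not reported")
        elif value < minimum:
            reasons.append(f"{name}={value:.3f} below minimum {minimum:.3f}")
    return not reasons, reasons


plan = ChangeControlPlan(
    allowed_changes=frozenset({"retrain_same_architecture"}),
    min_metrics={"sensitivity": 0.90, "specificity": 0.85},
)
print(within_plan(plan, ProposedUpdate("retrain_same_architecture",
                                       {"sensitivity": 0.92, "specificity": 0.88})))
# (True, [])
ok, reasons = within_plan(plan, ProposedUpdate("new_input_feature",
                                               {"sensitivity": 0.93, "specificity": 0.80}))
print(ok, len(reasons))  # False 2
```

The design choice is to return every reason rather than stopping at the first failure, so a reviewer sees the full picture of why an update falls outside the plan.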

The message is clear:

You can’t just plug in AI and hope for the best.

You must prove it works — safely, transparently, and consistently.


The Leadership Dilemma

Every pharma executive faces the same question:

How do we move fast enough to stay ahead — without moving so fast we break trust?

The answer isn’t “slow down.”

It’s “build smarter.”

Responsible AI isn’t about restricting innovation — it’s about protecting its credibility.

The Solution: A Framework for Trusted AI

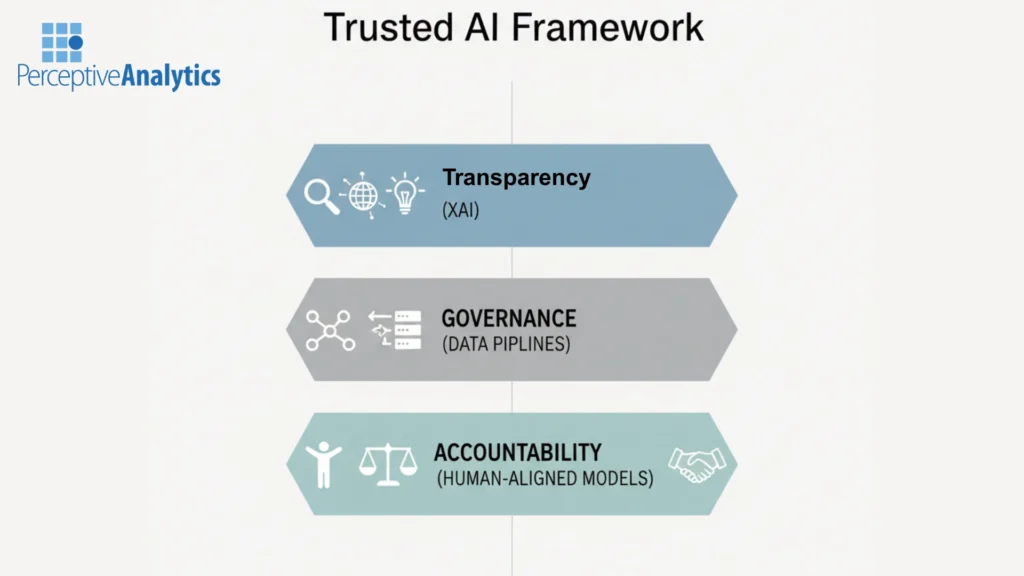

At Perceptive Analytics, we’ve built a clear framework for AI that’s not only fast, but faithful —

AI that can be trusted, traced, and tested.

Our approach stands on three pillars:

1. Explainable AI (XAI) — Because the Black Box Is Dead

Trust begins with understanding.

XAI makes complex models transparent — showing why a decision was made, not just what the decision is.

- For Scientists: In stability testing, XAI doesn’t just predict shelf life; it reveals which chemical markers are driving degradation. A prediction becomes an explanation.

- For Quality Teams: When a deviation triggers an alert, XAI pinpoints the sensor or process variable most responsible. Suddenly, an alarm becomes a diagnosis.
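As a rough illustration of turning an alarm into a diagnosis, the sketch below ranks process variables by how far the current reading sits from its in-control history, using a per-variable z-score. This is a deliberate simplification, not full XAI: model-attribution methods such as SHAP explain a specific model’s output, whereas this ranks raw sensor deviations and ignores correlations between variables. The function name `diagnose_deviation`, the 3-sigma default, and the sensor names and values are hypothetical.

```python
from statistics import mean, stdev


def diagnose_deviation(baseline, reading, threshold=3.0):
    """Rank process variables by how many standard deviations the current
    reading sits from its in-control history (a simple z-score, not SHAP).

    baseline: dict mapping variable name -> list of in-control historical values
    reading:  dict mapping variable name -> current value
    Returns (alert, ranked), where ranked is [(name, z), ...] sorted by |z|.
    """
    scores = {}
    for name, history in baseline.items():
        sd = stdev(history)
        if sd == 0:
            raise ValueError(f"baseline for {name!r} has zero variance")
        scores[name] = (reading[name] - mean(history)) / sd
    ranked = sorted(scores.items(), key=lambda kv: abs(kv[1]), reverse=True)
    alert = abs(ranked[0][1]) > threshold   # 3-sigma default is an assumption
    return alert, ranked


# Synthetic example: hypothetical sensor names and values.
baseline = {
    "temperature_C": [25.0, 25.2, 24.9, 25.1, 24.8],
    "pressure_bar": [1.50, 1.52, 1.49, 1.51, 1.48],
    "stirrer_rpm": [300, 302, 298, 301, 299],
}
alert, ranked = diagnose_deviation(
    baseline, {"temperature_C": 25.1, "pressure_bar": 1.70, "stirrer_rpm": 300}
)
print(alert, ranked[0][0])  # True pressure_bar
```

Returning the full ranking, not just the top variable, lets a quality reviewer see whether one variable clearly dominates or several moved together.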

2. Governed Data Pipelines — Because Trust Starts with Data

You can’t build reliable AI on unreliable data.

Our governed data pipelines ensure integrity, accuracy, and full lineage from source to decision.

- Data Provenance: Every data point is traceable to origin.

- Data Quality: Automated validation ensures accuracy before any model touches it.

- Data Security: Strong access controls protect sensitive patient and manufacturing data, supporting compliance with GDPR, HIPAA, and other applicable regulations.
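A minimal sketch of the provenance and quality ideas together: records are checked against field rules before any model sees them, and accepted records are wrapped with their source, an ingestion timestamp, and a SHA-256 fingerprint so they can be traced later. The rules, field names, the 110% assay ceiling, and the source name `lims_export.csv` are hypothetical placeholders; real rules would come from an organization’s own data specifications.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical validation rules for a stability-test record; real rules would
# come from the organization's own data specifications.
RULES = {
    "batch_id": lambda v: isinstance(v, str) and v != "",
    "assay_pct": lambda v: isinstance(v, (int, float)) and 0 <= v <= 110,
    "timepoint_months": lambda v: isinstance(v, int) and v >= 0,
}


def validate(record):
    """Return the names of fields that are missing or fail their rule."""
    return [name for name, rule in RULES.items()
            if name not in record or not rule(record[name])]


def with_provenance(record, source):
    """Wrap a record with lineage metadata: its source, the ingestion time, and
    a SHA-256 fingerprint of its canonical JSON (sorted keys, no spaces)."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return {
        "data": record,
        "source": source,
        "ingested_at": datetime.now(timezone.utc).isoformat(),
        "sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
    }


def ingest(records, source):
    """Quarantine invalid records so no model ever trains on them; tag the
    valid ones with provenance."""
    accepted, rejected = [], []
    for record in records:
        errors = validate(record)
        if errors:
            rejected.append((record, errors))
        else:
            accepted.append(with_provenance(record, source))
    return accepted, rejected


accepted, rejected = ingest(
    [{"batch_id": "B-001", "assay_pct": 99.2, "timepoint_months": 6},
     {"batch_id": "", "assay_pct": 150.0, "timepoint_months": 6}],
    source="lims_export.csv",   # hypothetical source name
)
print(len(accepted), rejected[0][1])  # 1 ['batch_id', 'assay_pct']
```

Hashing a canonical JSON form (sorted keys) means the same record always yields the same fingerprint regardless of key order, which is what makes later lineage checks meaningful.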

3. Human-Aligned Models — Because AI Should Serve People, Not Replace Them

We design AI that works with human experts, not around them.

- Human-in-the-Loop: Critical decisions always have a qualified reviewer.

- Bias Detection: Continuous testing detects and mitigates bias, supporting fairness in clinical and commercial predictions.

- Model Monitoring: Algorithms evolve — and we make sure they evolve responsibly, with retraining triggers and audit trails.
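One common way to implement a retraining trigger is to watch for input drift. The sketch below uses the Population Stability Index (PSI), which compares binned proportions of a reference sample against recent data, and appends every check to an audit log. The 0.25 threshold is a widely used rule of thumb rather than a regulatory standard, and the function names and feature name are hypothetical. A triggered flag here is meant to route the model to a human reviewer, not to retrain automatically.

```python
import math
from datetime import datetime, timezone


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index: sum over bins of (a - e) * ln(a / e), where e
    and a are the reference and recent proportions. Equal-width bin edges come
    from the reference sample's range; eps avoids log(0) for empty bins."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            i = int((v - lo) / width)
            counts[min(max(i, 0), bins - 1)] += 1  # clamp out-of-range values to edge bins
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def check_drift(feature, expected, actual, audit_log, threshold=0.25):
    """Compute PSI, record the check in the audit log, and return True if the
    model should be flagged for human review and possible retraining."""
    score = psi(expected, actual)
    flagged = score > threshold  # 0.25 is a rule of thumb, not a standard
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "feature": feature,
        "psi": round(score, 4),
        "retrain_triggered": flagged,
    })
    return flagged


reference = [i / 100 for i in range(1000)]   # synthetic: spread over [0, 10)
recent = [5 + i / 200 for i in range(1000)]  # synthetic: shifted to [5, 10)
audit_log = []
print(check_drift("input_feature", reference, recent, audit_log))  # True
print(audit_log[-1]["retrain_triggered"])  # True
```

Logging every check, including the ones that pass, is what turns monitoring into an audit trail: the record shows the model was watched, not only when it failed.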

“AI shouldn’t just predict — it should explain itself.”

— Chief Data Scientist, Global Biopharma Partner

Case Insight: Explainable AI in Stability Testing

A leading biopharma company faced a recurring issue — inconsistent stability test results that prolonged shelf-life studies and slowed down regulatory approvals.

We implemented an explainable predictive model that not only forecasted degradation with higher accuracy, but also surfaced the five key process variables driving those outcomes.
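The case study does not say which explainability method was used. Permutation importance is one common, model-agnostic way to rank drivers: shuffle one input at a time and measure how much prediction error grows. The sketch below assumes a generic `predict` callable; the feature names, the synthetic data, and the stand-in `model` function are hypothetical and take the place of a real fitted degradation model.

```python
import random
from statistics import mean


def mse(y_true, y_pred):
    """Mean squared error between observed and predicted values."""
    return mean((t - p) ** 2 for t, p in zip(y_true, y_pred))


def permutation_importance(predict, X, y, feature_names, n_repeats=5, seed=0):
    """Model-agnostic driver ranking: shuffle one feature column at a time and
    record how much the model's error grows. A larger increase means the model
    leans more heavily on that feature.

    predict: callable mapping a list of feature rows to a list of predictions
    X: list of rows (each a list of floats); y: list of observed outcomes
    """
    rng = random.Random(seed)
    baseline = mse(y, predict(X))
    importances = {}
    for j, name in enumerate(feature_names):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(y, predict(X_perm)) - baseline)
        importances[name] = mean(increases)
    return sorted(importances.items(), key=lambda kv: kv[1], reverse=True)


# Synthetic data and a stand-in "fitted model" (both hypothetical).
names = ["moisture", "temperature", "particle_size"]
data_rng = random.Random(42)
X = [[data_rng.uniform(0, 1) for _ in names] for _ in range(200)]
y = [3.0 * m + 1.0 * t for m, t, _ in X]  # particle_size has no effect


def model(rows):
    return [3.0 * m + 1.0 * t for m, t, _ in rows]


print([name for name, _ in permutation_importance(model, X, y, names)])
# ['moisture', 'temperature', 'particle_size']
```

Taking the top entries of the returned ranking (for example, the first five) gives a shortlist of key drivers for scientists to investigate. Because the method only needs predictions, it works the same way whatever model sits behind `predict`.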

The impact was immediate and cross-functional:

- Scientists gained visibility into the “why.” Instead of relying on opaque predictions, they could trace degradation patterns back to specific drivers.

- Manufacturing refined its processes. Targeted adjustments improved stability outcomes and reduced rework.

- Regulatory teams strengthened their submissions. Transparent, interpretable evidence made compliance discussions smoother and faster.

When AI is transparent, it doesn’t just speed decisions — it deepens understanding and elevates confidence across the value chain.


The Perceptive Philosophy: Intelligence You Can Stand Behind

For us, responsible AI isn’t a tagline. It’s an operating principle.

We build intelligence that earns trust — for every stakeholder, across every decision layer.

- For Scientists: A transparent lab partner that accelerates discovery, clarifies drivers, and strengthens hypotheses without ever obscuring the science.

- For Auditors: A system with a complete, auditable trail, with every data point, every feature, and every model version captured, traceable, and defensible.

- For Executives: Confidence that innovation is advancing safely, predictably, and in full alignment with global regulatory expectations.
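One way to make an audit trail defensible is hash chaining: each entry stores the hash of the previous one, so any later edit, deletion, or reordering becomes detectable. The sketch below is a minimal, standard-library illustration; the `AuditTrail` class and the event fields are hypothetical, and hash chaining makes tampering evident rather than preventing it, so it complements access controls and electronic signatures rather than replacing them.

```python
import copy
import hashlib
import json


class AuditTrail:
    """Append-only log where each entry stores the hash of the previous entry,
    so editing, reordering, or deleting a past entry breaks verification.
    This makes tampering evident; it does not by itself prevent it."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(event, prev_hash):
        body = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
        return hashlib.sha256(body.encode("utf-8")).hexdigest()

    def append(self, event):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        event = copy.deepcopy(event)  # decouple from the caller's dict
        digest = self._digest(event, prev_hash)
        self.entries.append({"event": event, "prev_hash": prev_hash, "hash": digest})
        return digest

    def verify(self):
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != self._digest(entry["event"], entry["prev_hash"]):
                return False
            prev_hash = entry["hash"]
        return True


# Hypothetical events; field names are illustrative.
trail = AuditTrail()
trail.append({"action": "train", "model_version": "1.0.0", "dataset_id": "stability-set-A"})
trail.append({"action": "approve", "model_version": "1.0.0", "reviewer": "qa_lead"})
print(trail.verify())  # True
trail.entries[0]["event"]["model_version"] = "1.0.1"  # simulate an after-the-fact edit
print(trail.verify())  # False
```

Recording the model version alongside dataset identifiers in each event is what lets an auditor reconstruct which data produced which model, and the chain shows that record has not been altered since.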

Because in pharma, the real measure of intelligence isn’t just accuracy.

It’s accountability.

The Future: Fast, Fair, and Faithful

The pharma leaders of 2026 won’t just deploy AI.

They’ll govern it.

They’ll know that speed without accountability isn’t progress — it’s risk.

Trustworthy AI doesn’t slow you down.

It allows you to go faster, safer, and smarter — with every stakeholder on your side.

“The next era of pharma intelligence won’t be defined by how powerful your AI is — but by how trusted it becomes.”

— Perceptive Analytics Leadership Team